Introduction

At the end of my latest blog post, I promised that I would talk about how to perform constrained maximization using unconstrained optimizers. This can be accomplished by employing clever transformation and nice properties of maximum likelihood estimators – see this fantastic post from the now defunct 🙁 Econometrics Beat. Eventually I will get around to discussing more of the statistical details behind this approach and its implementation from scratch in R.

RIGHT NOW, I want to talk about how to obtain standard errors for Gaussian mixture model parameters estimated using the EM algorithm. So far, I’ve written two posts with respect to Gaussian mixtures and the EM algorithm:

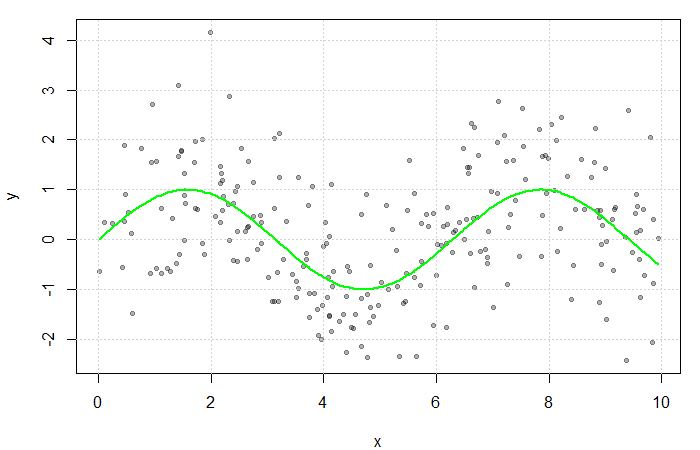

- Embracing the EM algorithm: One continuous response, which motivates the theory behind the EM algorithm using a two component Gaussian mixture model; and

- EM Algorithm Essentials: Maximizing objective functions using R’s optim, which demonstrates how to implement log-likelihood maximization from scratch using the

optimfunction.

Both posts so far have focused only on POINT ESTIMATION! That is, obtaining estimates of our mixture model parameters.

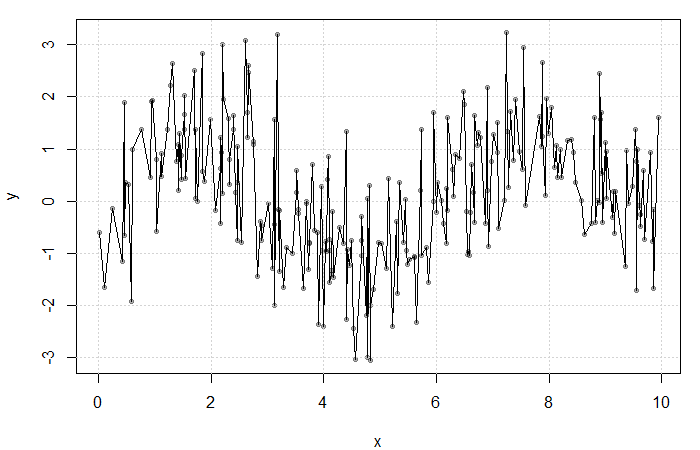

Any estimate we obtain using any statistical method has some uncertainty associated with it. We quantify the uncertainty of a parameter estimate by its STANDARD ERROR. If we repeated the same experiment with comparable samples a large number of times, the standard error would reflect how much our estimates differ from experiment to experiment. Indeed, we would estimate the standard error as the standard deviation of the effect estimates across experiments. If we can understand how our estimates vary across experiments, i.e., estimate their standard errors, we can perform statistical inference by testing hypotheses or constructing confidence intervals, for example.

We usually do not need to conduct all of these experiments because theory tells us what form this distribution of effect estimates takes! We refer to this distribution as the SAMPLING DISTRIBUTION. When the form of the sampling distribution is not obvious, we may use resampling techniques to estimate standard errors. The EM algorithm, however, attempts to obtain maximum likelihood estimates (MLEs) which have theoretical sampling distributions. For well-behaved densities, MLEs can be shown to be asymptotically normally distributed and unbiased with a variance-covariance matrix equal to the inverse of the Fisher Information matrix, i.e., a function of the second derivatives of the log-likelihood or equivalently, the square of the first derivatives…

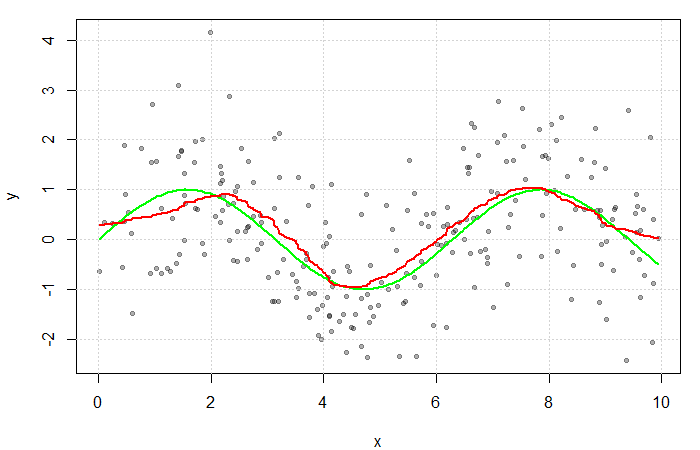

The tricky bit with the EM algorithm is we don’t maximize the observed log-likelihood directly to estimate Gaussian mixture parameters. Instead, we maximize a more convenient objective function. People much smarter than I have figured out this can give you the same answer. A question remains: if standard errors are estimated using the second derivative of the log-likelihood, but we used the objective function, how do we properly quantify uncertainty? Particularly as the derivatives of the log-likelihood are not straight-forward, for the same reasons that make optimization difficult.

Isaac Meilijson, another person smarter than me, provides us with a wealth of guidance in his 1989 paper titled A Fast Improvement to the EM Algorithm on its Own Terms (I love this title). In this blog post, we summarize and demonstrate how to construct simple estimators of the observed information matrix, and subsequently the standard errors of our EM estimates, when we have independent and identically distributed responses arising from a two-component Gaussian mixture model. Essentially, due to some nice properties, we can simply use the derivatives of our objective function, in lieu of our log-likelihood, to estimate the observed information. Standard errors computed using the observed information are then compared to those obtained using numerical differentiation of the observed log-likelihood.